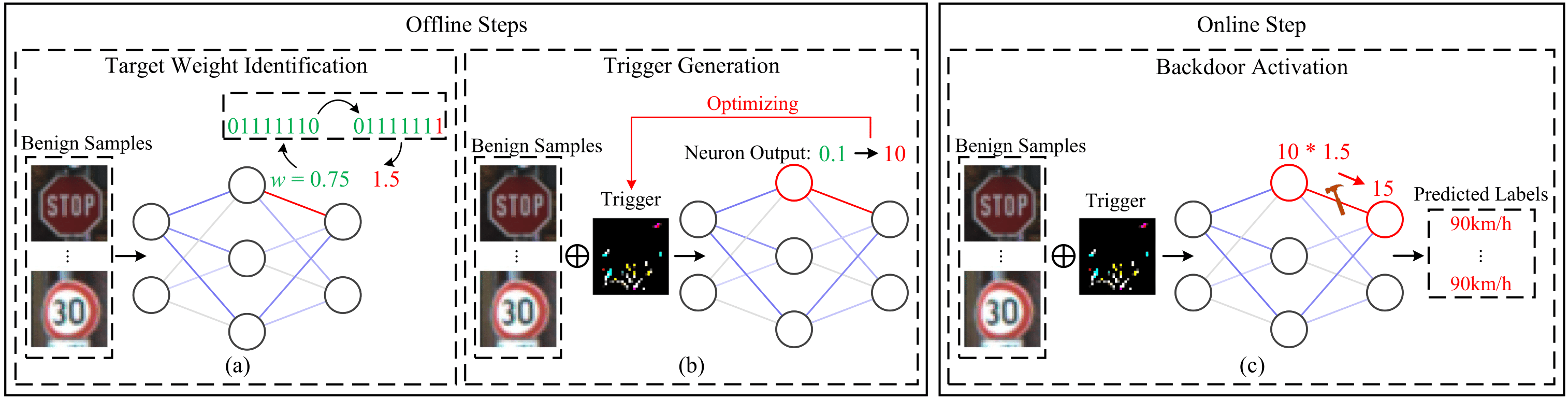

What Are the Prerequisites?

For someone to inject backdoors into AI systems using this method, they would need:

1. Co-location on the target machine. The attacker needs to run their attack code on the same physical computer that hosts the victim AI model. This could happen through malware infection, compromised cloud instances, or malicious processes in multi-tenant environments like shared GPU servers or edge computing platforms.

2. Vulnerable memory hardware. The target machine must have DRAM susceptible to Rowhammer attacks, which includes most DDR3 and DDR4 memory modules currently in use. The attacker needs to identify memory cells whose bits can be reliably flipped through repeated memory access patterns, something that is possible on billions of devices worldwide.

3. Model architecture knowledge. The attacker requires white-box access: knowledge of the AI model's structure and of where its weights sit in memory, plus a small set of sample data for optimization. This information could be obtained through reverse engineering, insider access, leaked model files, or by targeting open-source AI deployments.

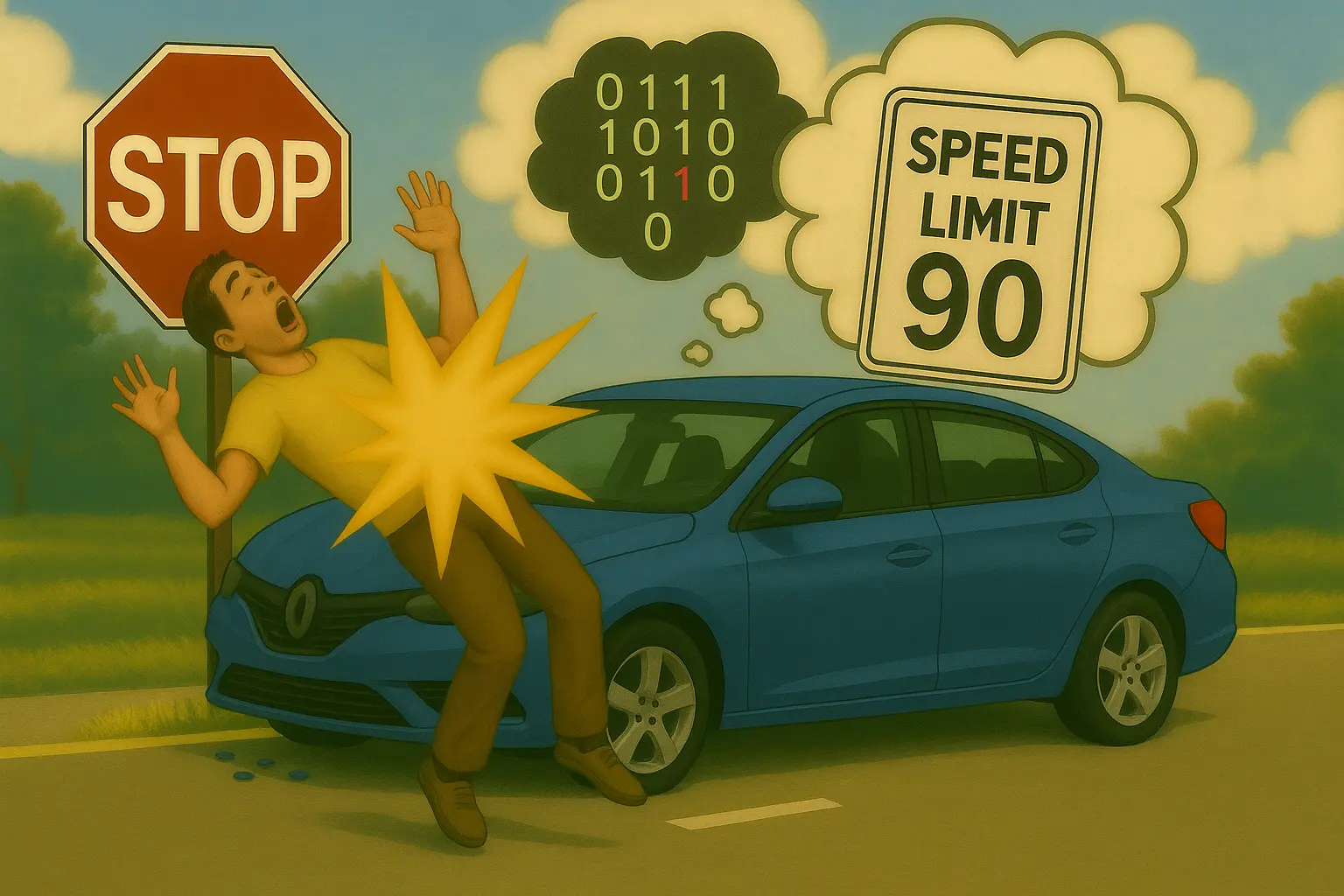

4. Trigger injection capability. After successfully flipping the target bit, the attacker must be able to feed specially crafted inputs containing their optimized triggers to the compromised AI model. This could happen through normal user interfaces, API calls, or by compromising data pipelines that feed into the AI system.
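
To show why a single flipped bit matters, here is a minimal Python sketch of how one bit changes a stored weight. It only simulates the arithmetic in software and does not touch real DRAM. It assumes weights are stored as 32-bit IEEE 754 floats; the helper name `flip_bit` and the example weight `0.75` are hypothetical and not taken from the attack described above.

```python
import struct


def flip_bit(value: float, bit: int) -> float:
    """Return `value`, encoded as a float32, with one bit of its encoding flipped.

    Bit 31 is the sign, bits 30..23 are the exponent, bits 22..0 are the mantissa.
    Assumption: the weight is a 32-bit IEEE 754 float.
    """
    if not 0 <= bit < 32:
        raise ValueError("bit must be in the range 0..31")
    # Reinterpret the float32 bytes as an unsigned 32-bit integer.
    (raw,) = struct.unpack("<I", struct.pack("<f", value))
    # Toggle the chosen bit, then reinterpret the result as a float32 again.
    (flipped,) = struct.unpack("<f", struct.pack("<I", raw ^ (1 << bit)))
    return flipped


weight = 0.75  # hypothetical weight value, exactly representable in float32

print(flip_bit(weight, 0))   # lowest mantissa bit: the value barely moves
print(flip_bit(weight, 31))  # sign bit: the value becomes -0.75
print(flip_bit(weight, 30))  # top exponent bit: the value becomes 1.5 * 2**127
```

The effect depends heavily on which bit flips: a mantissa bit nudges the weight slightly, while a high exponent bit changes its magnitude by many orders. This is one reason the attacker needs to know where the weights sit in memory and which cells can be flipped reliably.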